How AI Changed Categories While You Were Measuring Speed

Four years ago, I was genuinely excited. I could write a professional article in one hour instead of half a day. That felt revolutionary.

Last week, I built a complete software development kit in ten minutes. Not an article about an SDK. The actual SDK. Functional code across multiple files, tested, documented, ready to deploy.

That SDK now runs automatically, doing tasks that used to require human attention.

If the distance between those two experiences doesn't create a strange feeling in your chest, you might be the person this article is written for.

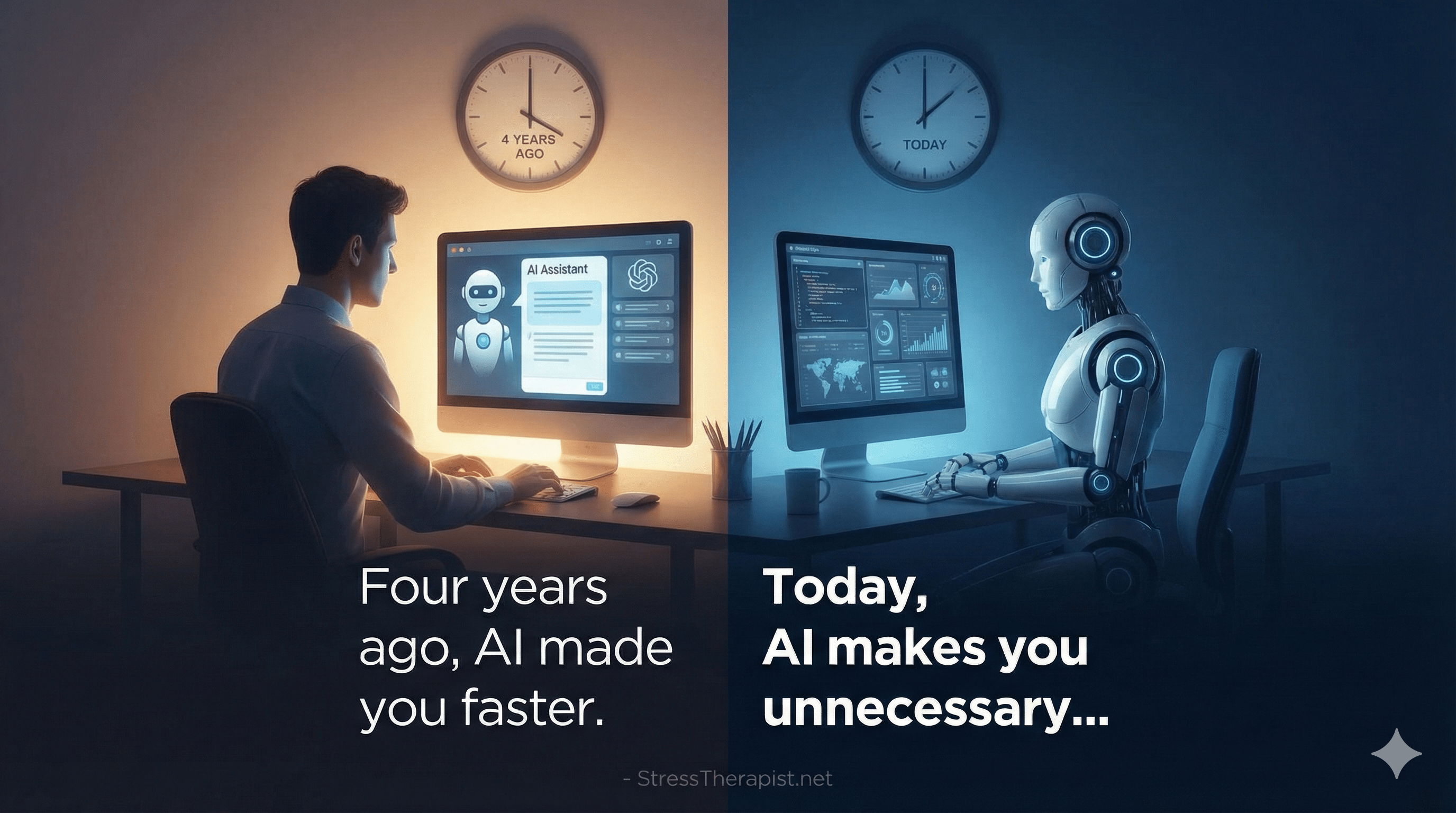

"Four years ago, AI made you faster.

Today, AI makes you unnecessary for most of what you used to do.

That's either terrifying or liberating - depending on whether you've updated your mental model."

The Measurement Problem

Here's something uncomfortable: most people who think they're "keeping up with AI" aren't. They're measuring the wrong thing.

If you're tracking "how much faster AI can write" or "how much better AI can summarise," you're watching the wrong dimension entirely. It's like measuring how fast your car accelerates while missing the fact that cars now drive themselves.

The real metric isn't how much better AI does existing tasks. It's which tasks humans no longer need to do at all.

That's a completely different question. And almost nobody is asking it.

Three Structural Shifts That Changed Everything

Between 2021 and 2025, AI didn't just improve. It changed categories. Like ice becoming water - not warmer ice, but a fundamentally different state.

Three shifts happened simultaneously:

Shift One: From Compression to Agency

In 2021, AI was a compression tool. You had the ideas, the knowledge, the intent. AI just compressed your execution time. You still did the thinking. AI was, essentially, faster typing.

In 2025, AI is an agency tool. It thinks, plans, executes, evaluates, and iterates. The bottleneck isn't "how fast can you write" anymore. It's "how clearly can you define what you want?"

That's not a speed improvement. That's a category change.

Shift Two: Humans Moved Outside the Loop

The old process: You think → You prompt → AI generates → You evaluate → You correct → AI regenerates → You evaluate again... Every step required your energy. You were inside every step of the loop.

The new process: You define the goal → AI handles everything in the middle → You receive the result.

The feedback loop didn't disappear; the human stepped out of it. You used to sit inside every iteration. Now you define the goal and step aside while the system runs fifty refinement cycles in a couple of minutes.

The Hidden Consequence

When iteration becomes nearly free, quality improves, not just speed. A human might do three revision passes before accepting "good enough." An autonomous system can do fifty passes in the time you'd take to do one. The output isn't just faster; it can be better than what a human would produce alone.

Shift Three: Capabilities Became Composable

In 2021, AI capabilities were isolated. One tool wrote text. Another generated images. A third summarised documents. You coordinated them. You were the glue.

In 2025, capabilities chain together autonomously. Search, analyse, decide, build, test, revise, all without stopping to ask permission at each step. The system orchestrates itself.

Think of it like cooking. In 2021, you had one chef who could only chop vegetables, another who could only boil water, another who could only plate food. You managed them all.

In 2025, you have one chef who plans the menu, preps ingredients, cooks, plates, cleans up, and adjusts the recipe based on how it turned out - all while you're in the other room.

Why You Can't See This (And It's Not Your Fault)

If you're feeling sceptical right now, that's not a character flaw. It's how human perception works.

Our brains perceive change in the dimensions we're already measuring. If you've been tracking "how well AI writes articles," you'll notice improvements in article-writing. You won't notice when articles become a trivial byproduct of systems that build entire businesses.

The measurement frame itself creates blindness.

It's like someone who's been tracking car fuel efficiency for decades. They'd notice improvements from 25 to 30 to 35 miles per gallon. They might completely miss the emergence of electric vehicles because they weren't measuring "what powers the car" - they were measuring "how far does petrol take you."

You can't perceive emergent properties by examining components. If you ask "what can this AI model do?" you get one answer. If you ask "what can a system using this model plus code execution plus web search plus file management plus iterative refinement do?" you get a completely different answer.

The False Confidence Trap

Here's the uncomfortable truth: the people furthest behind often feel most confident.

If you're using ChatGPT regularly, you probably think you're keeping up. You're learning prompts. You're being responsible about AI adoption. You feel current.

But you might be optimising the wrong layer entirely. Getting really good at ice sculpture while the ice melts into water.

The people who've ignored AI completely are actually easier to reach than the people who've partially adopted it. At least the complete novices know they don't know. The partial adopters have false confidence - they believe they've already adapted.

This isn't meant as criticism. Everyone who didn't spend the last year building autonomous systems is in this position. The change happened faster than any reasonable person could track. That's not a failure of intelligence - it's a fundamental incompatibility between exponential change and human perception.

What This Means For You

If you've read this far with growing discomfort, that discomfort is useful information. It means your mental model is being challenged. That's the first step toward updating it.

Here's the practical reality:

The bottleneck has shifted from execution to specification. The person who wins isn't the best writer or the best coder or the best designer. It's the person who can most clearly define what success looks like. Clarity of thinking now matters more than any individual execution skill.

Much of what you currently do can be delegated. Not "will eventually be possible to delegate," but possible to delegate right now, today. The question isn't whether it's possible. The question is whether you've tried.

Quality standards are shifting. When iteration is nearly free, the standard moves from "good enough" to "as good as it can be." The bar for acceptable work is rising because the tools can now iterate until things are genuinely excellent.

New dimensions of value are emerging. Judgment, taste, specification, strategy, relationships - these become more valuable, not less. The human contribution shifts upward, to things that can't be automated. But "things that can't be automated" is a much smaller category than people think.

Two Paths Forward

You now face a choice.

The first path: Dismiss this. File it under "hype" or "not relevant to my work" or "sounds exaggerated." Continue measuring AI progress in old dimensions. Watch speed improvements while missing category changes. Stay confident while falling behind.

This path is comfortable. It requires no action. It preserves your current mental model.

The second path: Test this. Pick something you do regularly that takes more than an hour. Try to build a system that does it. Not perfectly - just functionally. See what happens.

This path is uncomfortable. It requires effort. It might break your mental model.

But here's the thing about mental models: the longer you hold onto an outdated one, the more painful the eventual update becomes. The gap between "what I think is true" and "what is actually true" only grows over time.

A Final Thought

I've spent 27 years as a therapist helping people see realities they'd rather not see. Uncomfortable truths about patterns, relationships, traumas. The most important lesson I've learned: you can't think someone into updating their perception. They have to experience the failure of their current model.

This article can't update your mental model. Only experience can do that.

But maybe, if I've done my job, you now have enough doubt to try something. To test your assumptions. To watch what happens when you ask AI to do something you're confident it can't do.

That's all I can offer: an invitation to discover something for yourself.

The question isn't whether AI has changed. It has.

The question is whether you'll update before the gap becomes a chasm.

What if you spent thirty minutes this week trying to automate something you currently do manually?

Not because you're convinced it will work.

But because you're curious about whether your predictions are still accurate.

About the Author

Adewale Ademuyiwa is a BABCP-registered cognitive behavioural therapist with 27 years of experience in mental health, including over 20 years in NHS settings. He operates StressTherapist.net and runs Private Content Wizard, an AI-powered business that has helped over 12,000 small businesses reduce content creation time from days to minutes.

His work sits at the intersection of human psychology and artificial intelligence - understanding how minds work and how technology can serve human flourishing rather than replace human connection.

Connect: StressTherapist.net | PrivateContentWizard.com
